Computer Vision and Robotics Lab

The Computer Vision and Robotics Lab is a research group for active vision, real-time processing, neuromorphic engineering, and robotics.

Dept. of Computer Architecture and Computer Technology

Universidad de Granada

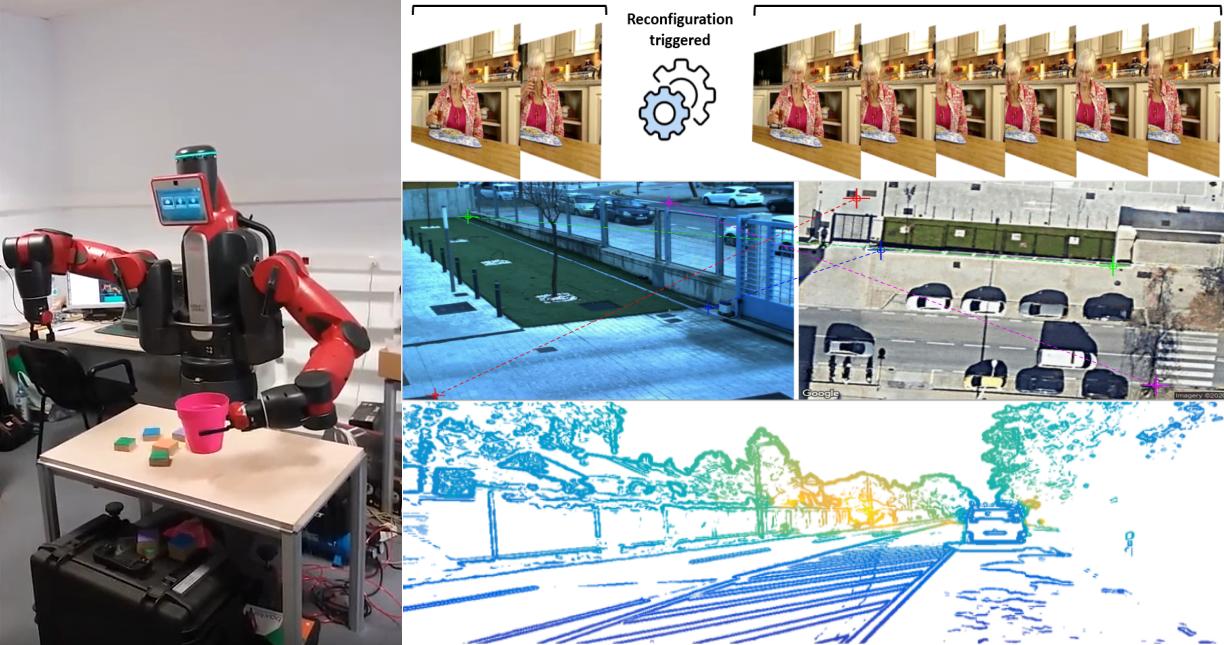

Welcome to the Computer Vision and Robotics Lab, part of the Department of Computer Architecture and Technology at the University of Granada. Our research focuses on active vision, autonomous navigation, neuromorphic vision, control, perception-action loops, collaboration, and robotics. We are interested not in passive vision sensors but in sensors that can actively change their point of view, adapt the way they perceive their environment, or select the most relevant information for the task at hand, on the assumption that this enables smart decision making. To enable active vision, our research also covers architectures that achieve real-time performance.

We have long-standing expertise in low-level image processing: we have developed optical flow algorithms (energy-based, gradient-based, block matching, etc.) and similar approaches for stereo models, as well as models for foreground/background subtraction (in surveillance applications) and local contrast descriptors (pixel-wise orientation, energy, intrinsic dimensionality, etc.). We are also interested in multi-perspective vision, autonomous navigation, and structure from motion, especially any motion processing that can be achieved in real time. We have worked in different fields, but especially on ADAS (Advanced Driving Assistance Systems for cars), autonomous navigation with UAVs, video-surveillance applications, and robotics.

Projects

Drone control based on vision and gestural programming

This project integrates gesture recognition as a drone control mechanism, giving the drone a degree of autonomy with respect to its operator, which is especially useful in military operations. The application is implemented in Python, mainly using the OpenCV and OpenPose libraries.

Drones for video surveillance applications

The final objective of this project is to give a drone the autonomy to carry out video surveillance tasks. The main tasks are people detection and people tracking on the video stream from the drone's onboard camera, using computer vision and machine learning algorithms.

Real-World anomaly detection in Surveillance through Semi-supervised Federated Active Learning

This project covers the research and deployment of semi-supervised deep learning models for anomaly detection in surveillance videos, running on a synchronous Federated Learning architecture in which training is distributed across many nodes.
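As a rough illustration of what one synchronous round looks like, the sketch below averages the model weights reported by every node, weighting each node by its number of training samples. The project description does not state its aggregation rule, so federated averaging is an assumption here, and `federated_average`, the flat weight lists, and the sample counts are hypothetical names and data chosen for the example.

```python
from typing import List, Sequence


def federated_average(
    node_weights: Sequence[Sequence[float]],
    node_sample_counts: Sequence[int],
) -> List[float]:
    """Aggregate one synchronous round: sample-weighted mean of node weights."""
    if len(node_weights) != len(node_sample_counts) or not node_weights:
        raise ValueError("need one sample count per node and at least one node")
    total = sum(node_sample_counts)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    size = len(node_weights[0])
    averaged = [0.0] * size
    for weights, count in zip(node_weights, node_sample_counts):
        if len(weights) != size:
            raise ValueError("all nodes must report the same number of weights")
        # Each node contributes in proportion to the data it trained on.
        for i, w in enumerate(weights):
            averaged[i] += w * count / total
    return averaged


if __name__ == "__main__":
    # Three hypothetical nodes, each reporting a two-parameter model.
    updates = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    counts = [10, 10, 20]
    print(federated_average(updates, counts))
```

In a synchronous setting the server waits for every node to report before aggregating, then sends the averaged weights back for the next round.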

Egomotion estimation methods for drones

This project integrates the bebop_autonomy package with ORB-SLAM2 on the ROS platform to perform localization and mapping with the Parrot Bebop2 drone. Applications are mainly indoor navigation and mapping.

Real-time clustering and tracking for event-based sensors

This work presents a real-time clustering technique that takes advantage of the unique properties of event-based vision sensors. Our approach redefines the well-known mean-shift clustering method using asynchronous events instead of conventional frames.
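To make the idea of running mean-shift on events rather than frames more concrete, here is a minimal sketch, not the authors' algorithm. It keeps a short sliding window of recent events and, for each new event, runs Gaussian-kernel mean-shift iterations starting at that event's position until it settles on a density mode. The class name `EventMeanShift`, the `bandwidth` and `window` parameters, and their default values are all assumptions made for the example; events are assumed to arrive in timestamp order.

```python
import math
from collections import deque
from typing import Deque, Tuple

Event = Tuple[float, float, float]  # (x, y, timestamp in seconds)


class EventMeanShift:
    """Mean-shift mode seeking over a sliding window of recent events."""

    def __init__(self, bandwidth: float = 5.0, window: float = 0.05) -> None:
        self.bandwidth = bandwidth  # spatial kernel width in pixels (assumed)
        self.window = window        # event lifetime in seconds (assumed)
        self.recent: Deque[Event] = deque()

    def update(
        self, event: Event, max_iter: int = 20, tol: float = 1e-3
    ) -> Tuple[float, float]:
        """Insert one event and return the density mode it converges to."""
        x, y, t = event
        self.recent.append(event)
        # Drop events that have fallen out of the temporal window.
        while self.recent and t - self.recent[0][2] > self.window:
            self.recent.popleft()
        for _ in range(max_iter):
            wsum = mx = my = 0.0
            for ex, ey, _ts in self.recent:
                d2 = (ex - x) ** 2 + (ey - y) ** 2
                w = math.exp(-d2 / (2.0 * self.bandwidth ** 2))
                wsum += w
                mx += w * ex
                my += w * ey
            # Move to the kernel-weighted mean of the windowed events.
            nx, ny = mx / wsum, my / wsum
            shift = math.hypot(nx - x, ny - y)
            x, y = nx, ny
            if shift < tol:
                break
        return x, y
```

Events that converge to nearby modes can be treated as members of the same cluster, and following a mode over time gives a simple track. Because every update touches only the events in the window, the cost per event stays bounded, which is what makes per-event processing plausible in real time.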

Visuoimitation - Baxter imitates movements looking at an operator

The objective of the system is to control a Baxter robot using the Kinect V2 and its body structure extraction modules (body tracking) under the ROS (Robot Operating System) framework. Modules will be developed to interface the robot with the Kinect body pose estimation so that the robot can imitate the operator's movements in real time.

Object manipulation with the Baxter robot

The project presents various demonstrations of pick-and-place tasks with the Baxter research robot, including recognition and manipulation of objects of various shapes, sizes, and colours. It also covers trajectory and motion planning, the use of different grippers, and simulation with Gazebo.

Contour detection and proto-segmentation with event sensors

This project presents an approach to learning the location of contours and their border ownership using Structured Random Forests on event-based features. The contour detection and boundary assignment are demonstrated in a proto-segmentation application.

Object classification with the Baxter robot

This project shows the development of various color-based classification tasks in static and dynamic environments with the Baxter robot, including a conveyor that transports pieces to be classified in real time.

FPGA implementation of bottom-up attention with top-down modulation

This project presents an FPGA architecture for computing visual attention by combining a bottom-up saliency stream with a top-down, task-dependent modulation stream. The target applications are ADAS (Advanced Driving Assistance Systems), video surveillance, and robotics.
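One common way to combine the two streams, sketched below in software, is to normalise each bottom-up feature map and sum them with per-feature weights supplied by the task. This is an assumption about the combination rule rather than a description of the FPGA design; the function names, the feature names `intensity` and `motion`, and the weights are hypothetical.

```python
from typing import Dict, List, Tuple

Map = List[List[float]]


def normalise(feature: Map) -> Map:
    """Rescale a feature map to [0, 1]; a flat map becomes all zeros."""
    lo = min(min(row) for row in feature)
    hi = max(max(row) for row in feature)
    span = hi - lo
    if span == 0:
        return [[0.0 for _ in row] for row in feature]
    return [[(v - lo) / span for v in row] for row in feature]


def modulated_saliency(
    features: Dict[str, Map], task_weights: Dict[str, float]
) -> Map:
    """Weighted sum of normalised feature maps; weights carry the task bias."""
    names = list(features)
    rows, cols = len(features[names[0]]), len(features[names[0]][0])
    saliency = [[0.0] * cols for _ in range(rows)]
    for name in names:
        # Features without a task weight stay purely bottom-up (weight 1).
        weight = task_weights.get(name, 1.0)
        norm = normalise(features[name])
        for r in range(rows):
            for c in range(cols):
                saliency[r][c] += weight * norm[r][c]
    return saliency


def most_salient(saliency: Map) -> Tuple[int, int]:
    """Return (row, col) of the saliency maximum, the attended location."""
    return max(
        ((r, c) for r in range(len(saliency)) for c in range(len(saliency[0]))),
        key=lambda rc: saliency[rc[0]][rc[1]],
    )
```

For instance, a task that cares about moving objects could raise the weight of the `motion` map so that a moving region wins over a brighter static one.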

FPGA implementation of optical flow, disparity, and low-level features

This project uses a fine-grain pipelined and superscalar datapath to reach high performance at low clock frequencies on FPGAs. The final goal is a throughput of one data item per clock cycle. We show implementations of optical flow, disparity, and low-level local features.
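The value of a one-item-per-cycle pipeline can be seen with a little arithmetic: once the pipeline is full, a frame of N pixels takes about N cycles regardless of how many stages it has, so even a modest clock sustains a high frame rate. The sketch below computes this idealised bound. The function names and the example figures (VGA resolution, 50 MHz clock, 100 stages) are hypothetical, and the model ignores blanking intervals and memory stalls.

```python
def pipeline_cycles(num_items: int, depth: int) -> int:
    """Clock cycles to push num_items through a pipeline of `depth` stages.

    The first result appears after `depth` cycles; every later result
    follows one cycle apart, which is the one-item-per-cycle regime.
    """
    if num_items <= 0 or depth <= 0:
        raise ValueError("num_items and depth must be positive")
    return depth + num_items - 1


def frames_per_second(
    clock_hz: float, width: int, height: int, depth: int
) -> float:
    """Upper bound on frame rate when one pixel enters the pipeline per cycle."""
    return clock_hz / pipeline_cycles(width * height, depth)


if __name__ == "__main__":
    # Hypothetical figures: VGA frames, 50 MHz clock, 100-stage pipeline.
    print(f"{frames_per_second(50e6, 640, 480, 100):.1f} frames/s")
```

The pipeline depth only adds a fixed latency of `depth - 1` cycles per frame, which is why deep, fine-grain pipelines cost little in throughput.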